MAT Seminar Series 2022 • 2023

Spring 2023

Abstract

Ecosystems are disappearing, but so are our ways of sensing, performing, singing, and expressing ourselves in relation to them. Artist Jakob Kudsk Steensen digitizes vanishing natural environments in collaboration with scientists, authors, artists, performers, and researchers as a way to rejuvenate lost sensibilities toward specific ecosystems. His artworks use advanced technology to create hyper-sensory recreations of natural environments, featuring real-time interactive technologies as a new spatial language for connecting and collaborating.

Bio

Jakob Kudsk Steensen (b. 1987, Denmark) is an artist working with environmental storytelling through 3D animation, sound, and immersive installations. He creates poetic interpretations of overlooked natural phenomena through collaborations with field biologists, composers, and writers. Jakob recently presented his major solo exhibition “Berl-Berl” in Berlin at Halle am Berghain, commissioned by LAS, and showed “Liminal Lands” at Luma Arles as part of the “Prelude” exhibition. He was a finalist for the Future Generation Art Prize at the 2019 Venice Biennale. He received the Serpentine Augmented Architecture commission in 2019 to create his work The Deep Listener with Google Arts and Culture. He is the recipient of the Best VR Graphics award for RE-ANIMATED (2019) at the Cinequest Festival for Technology and Cinema, the Prix du Jury (2019) at Les Rencontres Arles, the Webby Award - People’s Choice VR (2018), and the Games for Change Award - Most Innovative (2018), among others.

For more information visit http://www.jakobsteensen.com/

See video recording of the talk here

Abstract

The Distributed Artificial Intelligence Research Institute (DAIR) was launched on December 2, 2021, by Timnit Gebru as a space for independent, interdisciplinary, community-rooted AI research, free from Big Tech’s pervasive influence. The institute recently celebrated its first anniversary. Timnit Gebru will discuss DAIR's research philosophy, which consists of the following principles: community, trust and time, knowledge production, redistribution, accountability, interrogating power, and imagination. She will discuss the incentive structures that make it difficult to perform ethical AI research and give examples of DAIR's work toward forging a different path.

Bio

Timnit Gebru is the founder and executive director of the Distributed Artificial Intelligence Research Institute (DAIR). Prior to that, she served as co-lead of the Ethical AI research team at Google until she was fired in December 2020 for raising issues of discrimination in the workplace. She received her Ph.D. from Stanford University and did a postdoc at Microsoft Research, New York City, in the FATE (Fairness, Accountability, Transparency, and Ethics in AI) group, where she studied algorithmic bias and the ethical implications underlying projects aiming to gain insights from data.

Timnit also co-founded Black in AI, a nonprofit that works to increase the presence, inclusion, visibility and health of Black people in the field of AI, and is on the board of AddisCoder, a nonprofit dedicated to teaching algorithms and computer programming to Ethiopian high school students, free of charge.

See video recording of the talk here

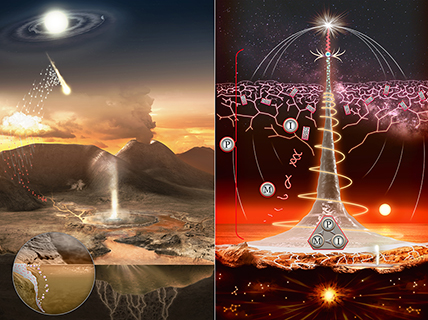

Abstract

How did life begin on the Earth approximately four billion years ago, and how might it get started elsewhere in the universe? New science over the past decade suggests that life on Earth started not deep in the oceans but on land, in a 21st-century version of what Charles Darwin originally proposed: a "warm little pond." Our modern setting is a hot spring pool subject to regular cycles of wetting and drying. Copious inputs of organics feed this pool, cooking up a proper "primordial soup" that stirs populations of protocells into a living matrix of microbes. Can this scenario of our deepest ancestry inform us about the nature of life itself and provide insight into us humans, our technology, and our civilizational future? This talk by Dr. Bruce Damer will take us on an end-to-end journey from the first flimsy proto-biological sphere to a possible future for the Earth's biosphere as it extends out into the cosmos.

Bio

Dr. Bruce Damer is Chief Scientist of the BIOTA Institute and a researcher in the Department of Biomolecular Engineering, UC Santa Cruz. His career spans four decades, including pioneering work on early human-computer interfaces in the 1980s, virtual worlds and avatars in the 1990s, space mission design for NASA in the 2000s, and developing chemical and combinatorial scenarios for the origin of life since 2010.

See video recording of the talk here

Abstract

Dr. Gaskins will discuss generative art and artificial intelligence (AI) art, forms of art created using algorithms, mathematical equations, or computer programs to generate images, sounds, animations, or other artistic creations. Generative art can be seen as a collaboration between the artist and the machine, in which the artist sets the framework and the machine creates the artwork within those constraints.

Bio

Dr. Nettrice Gaskins earned a BFA in Computer Graphics with Honors from Pratt Institute in 1992 and an MFA in Art and Technology from the School of the Art Institute of Chicago in 1994. She received a doctorate in Digital Media from Georgia Tech in 2014. Currently, Dr. Gaskins is a 2021 Ford Global Fellow and the assistant director of the Lesley STEAM Learning Lab at Lesley University. Her first full-length book, Techno-Vernacular Creativity and Innovation, is available through The MIT Press. Gaskins' AI-generated artworks can be viewed in journals, magazines, museums, and on the Web.

See video recording of the talk here

Abstract

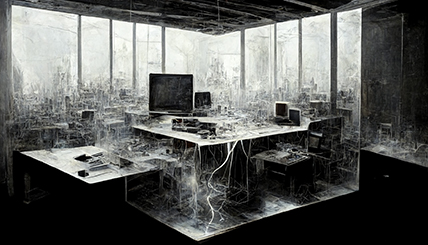

Recent advances in generative and perceptive AI have radically expanded the breadth, scope, and sophistication of human-machine interactions. Whether through creative co-production, confessional communion, or quantified selves under machine observation, we have invited ML systems to participate in our innermost spaces. In this talk I discuss my research into machine cohabitation, exploring the ways that we share space with these technological others. Spanning smart environments, robotic automation, data science, and real-time performance, my projects explore emergent technological possibilities while centering the human dynamics of these interactions. Are these acts of high-tech ventriloquism, psychological self-stimulation, mediumistic extensions of a creative unconscious, or collaborations with computational others? However we decide these questions of autonomy and agency, the value of these systems lies in what they reveal about human imagination and desire.

Bio

Robert Twomey is an artist and engineer exploring the complex ways we live, work, and learn with machines. In particular, he examines how emerging technologies impact sites of intimate life: what relationships we engender with machines, what data and algorithms drive these interactions, and how we can foster a critical orientation in developing these possibilities. He addresses these questions through the Machine Cohabitation Lab (cohab-lab.net), as an Assistant Professor at the Johnny Carson Center for Emerging Media Arts, University of Nebraska-Lincoln.

Twomey has presented his work at SIGGRAPH (Best Paper Award), CVPR, ISEA, NeurIPS, the Museum of Contemporary Art San Diego, Nokia Bell Labs Experiments in Art and Technology (E.A.T.), and has been supported by the National Science Foundation, the California Arts Council, Microsoft, Amazon, NVIDIA, and HP. He received his BS from Yale with majors in Art and Biomedical Engineering, his MFA in Visual Arts from UC San Diego, and his Ph.D. in Digital Arts and Experimental Media from the University of Washington. He is an artist in residence with the Arthur C. Clarke Center for Human Imagination at UC San Diego.

For more information visit https://roberttwomey.com

See video recording of the talk here

Abstract

The sublime, a long-sought artistic ideal, embodies grandeur that inspires awe and evokes deep emotional or intellectual responses. How do artists today employ data as a primary material to achieve this elusive quality while creating work that is both enigmatic and captivating? Additionally, how can they craft art experiences that resonate with audiences? By discussing a few of her recent projects, Rebecca Xu shares her exploration of and insights into this subject, shedding light on how data-driven art pieces challenge traditional conventions of artistic expression and reshape the quest for the sublime in our digital era.

Bio

Rebecca Ruige Xu teaches computer art as a Professor in the College of Visual and Performing Arts at Syracuse University. Her research interests include artistic data visualization, experimental animation, visual music, interactive installations, digital performance, and virtual reality. Xu’s work has appeared at many international venues, including the IEEE VIS Arts Program; SIGGRAPH & SIGGRAPH Asia Art Gallery; ISEA; Ars Electronica; Museum of Contemporary Art, Italy; and Los Angeles Center for Digital Art. Xu is the co-founder of the China VIS Arts Program. Currently, she serves as the Chair of the ACM SIGGRAPH Digital Arts Committee and as IEEE VIS’23 Arts Program Co-Chair.

For more information visit http://rebeccaxu.com/

See video recording of the talk here

Abstract

This presentation will report on a selection of projects realized in a class exploring generative AI image synthesis in the fall of 2022, as software such as DALL-E 2, Midjourney, and Stable Diffusion began to circulate within image-making communities. The presentation will introduce the concept of the course and then present student work.

Bio

George Legrady is Distinguished Professor in the Media Arts & Technology PhD program at UC Santa Barbara, where he directs the Experimental Visualization Lab (https://vislab.mat.ucsb.edu). His research, pedagogy, and artistic practice address the impact of computation on the veracity of data, specifically the photographic image. A pioneer in the field of digital media arts with an emphasis on digital photography, data visualization, interactive installation, machine learning, and natural language processing, he has presented his projects and research internationally in fine art and digital media arts installations, engineering conferences, and public commissions.

See video recording of the talk here

Zoom Link: https://ucsb.zoom.us/my/ky.zoom

Abstract

Some contemporary thinkers argue that the image is supplanting the drawing as the currency of contemporary architectural practice. This is not just a theoretical position; as we are all well aware, it is possible to envision, detail, and build buildings from information extracted from digital models presented as pictures on a computer screen. But even as an architecture of digital objects and images is increasingly possible, there has been a recent resurgence of enthusiasm for drawing, and specifically for techniques of projection, in late 20th-century and contemporary discourse. This talk is about drawing practices and how real and imagined material characteristics continue to inform architectural work: drawn, built, and modeled.

Bio

Emily White is an artist and architect who works with materials ranging from foam to inflatables to sheet metal. She has exhibited at the J. Paul Getty Museum, the Museum of Contemporary Art Los Angeles, and Materials & Applications, and has a permanent project installed in the Fort Lauderdale International Airport. She teaches design studios in Architecture at Cal Poly, San Luis Obispo. She holds an M.Arch from the Southern California Institute of Architecture (SCI-Arc) and a BA from Barnard College, Columbia University.

For more information visit https://emilywhiteprojects.com/

Zoom Link: https://ucsb.zoom.us/j/9314307354

Abstract

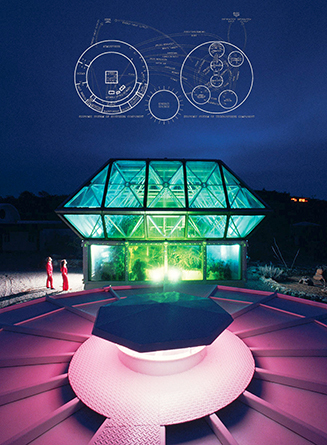

Systems diagrams are powerful tools that may be employed in a multitude of ways to understand and actualize complex phenomena. But like their cousins, algorithms, their scripts contain concealed biases, often in the form of feedback loop logics that can evolve into circumstances and/or entities that catch their authors off guard. This research examines how the “Economic System of B2” diagram translated the Biospherians’ rarefied synthetic environmental epistemology into a mini-Anthropocene ontology, acted as a ‘Rosetta Stone’ that facilitated interdisciplinary and quantitative design processes, produced the tightest building envelope ever constructed at its scale, and ultimately scripted the life-support generating performances of the crews inside Biosphere 2’s [B2] enclosure missions. It draws parallels between the Biospherians’ “Human Experiment” design approaches, heavily informed by their training as environmental managers, explorers, and Thespians, and their performances within B2, which increasingly manifest through tensions between the technocratic tendencies of their ‘scripted’ and ‘rehearsed’ biogeochemical molecular economy, and the emergent circumstances that necessitated their ‘off-script’ ‘improvisations.’ Ultimately, I argue that Art, Engineering, and Life reconfigured within B2 in surprising ways, which may reveal and forecast our own performances within the increasingly circular and accelerating evolution of the Anthropocene.

Bio

Meredith Sattler is currently an Assistant Professor of Architecture at Cal Poly San Luis Obispo, a PhD candidate in Science and Technology Studies at Virginia Tech, and a LEED BD+C. Her current research and teaching interests include conceptualizations of dynamic sustainable architectural systems in historic and current contexts; interdisciplinary structure and practice within functional territories between design and the ecological sciences; and the designer’s influence on the health of natural environment-technology-human interactions. She is founder and lead designer of cambioform, a furniture and environmental design studio, received the Ellen Battel Stoeckel Fellowship at the Norfolk Yale Summer School of Art, and has exhibited and published internationally. She holds Master of Architecture and Master of Environmental Management degrees from Yale University, and a Bachelor of Arts from Vassar College.

For more information visit https://meredith-sattler.net/

Winter 2023

Abstract

This talk will explore my approach to working with technology in bad faith. The technologies we rely on are meant to provide frictionless solutions to problems, and when things work as they should, these tools are meant to recede from the user’s attention. But when we intend to break technological tools and processes, their logic and the ideologies that they conceal become more apparent or present to hand. I will discuss recent work, including work in progress, that brings my interest in the obtuse and the obstinate to AI, including an effort to create personal AI oracles for exploring the aesthetics of subjectivity after machine learning.

Bio

Mitchell Akiyama is a Toronto-based scholar, composer, and artist. His eclectic body of work includes writings about sound, metaphors, animals, and media technologies; scores for film and dance; and objects and installations that trouble received ideas about history, perception, and sensory experience. He holds a PhD in communications from McGill University and an MFA from Concordia University and is Assistant Professor of Visual Studies in the Daniels Faculty of Architecture, Landscape, and Design at the University of Toronto.

See video recording of the talk here

For more information visit http://www.mitchellakiyama.com/

Abstract

In the context of the American landscape, the black body has historically been viewed as an “earth machine”: a technology used to lift the soil, root the flora, sow the seeds, break the stone, and pump the water. Before John Deeres and Bobcats, there were Black slaves. The till, plow, jackhammer, excavator, piston, and axe condensed into a single metric. In addition to being an exploitative capitalistic enterprise, slavery was also a cruel geoengineering project.

This talk reframes the historic narrative of the Black identity as a landscape technology through the exploration of a fictional world entitled Mojo. Distinctions between blackness, landscape, and technology are blurred, allowing black creativity, expression, and spirituality to materialize on an ecological scale. What would our landscapes look like if shaped by the values of a different culture? We unpack this question through a collection of CGI Afrofuturist vignettes that engage storytelling and science fiction as critical means to envision new trajectories of black identity.

Bio

Visual artist Jeremy Kamal engages CGI storytelling to explore relationships between Blackness, technology, and ecology.

He is a member of the design faculty at the Southern California Institute of Architecture. He studied Landscape Architecture at Harvard GSD and received a Master of Arts in SCI-Arc’s postgraduate Fiction and Entertainment program. His work uses themes of landscape and fiction to envision speculative environments in which Black life is at the center of geological phenomena. Through fiction, Kamal is interested in making explicit the connection between cultural abstractions and ecological realities.

His focus on landscape-centric narratives is the driving force behind the worlds he brings to life through animation, game engine technology, music, and storytelling. Kamal's work offers another perspective on the way we think about space and the cultural behaviors that shape it.

For more information visit jeremykamal.com/

See video recording of the talk here

Abstract

This talk will present a speculation rooted in my experience weaving electronics and developing software for weaving electronics. I will introduce the basics of woven structure in terms of its mechanical properties, as well as the methods by which it is designed and manipulated. I will also present some of the exciting opportunities for design and interaction when we consider weaving as a method of electronics production, such as the ability of textile structures to be unraveled, mended, and continually modified. Each of these underlying discussions will frame a provocation about alternative ways we might build, use, and unbuild our electronic products.

Bio

Laura Devendorf, assistant professor of information science with the ATLAS Institute, is an artist and technologist working predominantly in human-computer interaction and design research. She designs and develops systems that embody alternative visions for human-machine relations within creative practice. Her recent work focuses on smart textiles, a project that interweaves the production of computational design tools with cultural reflections on gendered forms of labor and visions for how wearable technology could shape how we perceive lived environments. Laura directs the Unstable Design Lab. She earned bachelor's degrees in studio art and computer science from the University of California, Santa Barbara, before earning her PhD at the UC Berkeley School of Information. She has worked in the fields of sustainable fashion, design, and engineering. Her research has been funded by the National Science Foundation, has been featured on National Public Radio, and has received multiple best paper awards at top conferences in the field of human-computer interaction.


See video recording of the talk here

Presence (2020) by Erin Gee and Jen Kutler. Networked biofeedback music performance for affective biosensors and touch-stimulator devices.

Abstract

Since the 1960s, composers have used technology to interrupt, amplify, and distort the relationship between music and its psychosomatic perception, seemingly dissolving Cartesian dualisms between mind and body while ushering in a new era of musical experience. At the borders of "new music" and "electronic music," biofeedback music is often articulated through futurist, cybernetic, and cyborg theory. I argue that the revolutionary promise of biofeedback music is compromised by its situatedness in traditional systems of value typical of European art music, in which patriarchal, humanist, and colonial bias quietly dominate the technological imaginary through metaphor.

In this presentation I contextualize biofeedback music history through the work of feminist musicologists and art historians, and also share examples of my own work in affective biofeedback: the development of low-cost and accessible open-source technologies, as well as the development of performance methods influenced by hypnosis, ASMR, and method acting as applied to choral music, robotic instruments, and VR interfaces.

Articulating biofeedback composition through principles of emotional reproduction and emotional labor, I emphasize wetware technologies (body hacking, social connection, empathetic and affective indeterminacy, and psychosomatic performance practice) as crucial complements to biofeedback hardware and software.

Bio

Canadian performance artist and composer Erin Gee (TIO’TIA:KE – MONTREAL) takes inspiration from her experience as a vocalist and applies it to poetic and sensorial technologies, likening the vibration of vocal folds to electricity and data across systems, or vibrations across matter. Gee is a DIY expert in affective biofeedback, highlighting concepts like emotional labor, emotional measurement, emotional performance, and emotional reproduction in work that spans artificial intelligence technology, vocal and electronic music, VR, networked performance, and robotics. Gee’s work has been featured in museums, new media art festivals, and music concert halls alike. She is currently a Social Sciences and Humanities Research Council (SSHRC) Canada Graduate Scholar at Université de Montréal, where she researches feminist methods for biofeedback music.

Image Credits: Portrait by Elody Libe (2019); Presence (2020) by Erin Gee and Jen Kutler. Networked biofeedback music performance for affective biosensors and touch-stimulator devices.

For more information visit https://eringee.net/

See video recording of the talk here

In Person: Elings Hall, room 2611

Abstract

Game audio is one of the most complex audio applications, incorporating audio DSP, spatial and physical modeling, linear audio production, and interactive sound design. The next generation of game audio engines offers a unique tool capable of creating distributable works of interactive audio alongside state-of-the-art animation, physics, visual effects, and graphics pipelines. In this talk, we will explore the expressiveness of game audio engines and consider the problems they were designed to solve. We will also dive into MetaSound, a next-generation interactive DSP graph tool for game audio. We will discuss its design, including directed acyclic flow graphs, sample-accurate timing, flexible subgraph composition, and low-latency interactivity, from the perspective of computational performance and usability.

Aaron McLeran's Bio

Aaron McLeran is a 2009 alumnus of MAT and has a background in physics and music. He was a sound designer and composer in video games before becoming an audio programmer. He is now the Director of the Audio Engine at Epic Games, working on Unreal Engine 5. He has worked on game audio for Spore, Dead Space, Call of Duty, Guild Wars 2, Paragon, and Fortnite, as well as all of Epic's tech demos and many special projects since 2014.

Phillip Popp's Bio

Phillip Popp works at the nexus of audio tools, DSP, and machine learning. He has over a decade of professional experience researching and developing a wide variety of audio analysis, machine learning, personalization, and real-time synthesis technologies. As a Principal Audio Programmer at Epic Games, he advances the state-of-the-art in game audio by building flexible, expressive, and performant tools to power the next generation of interactive audio experiences.

Abstract

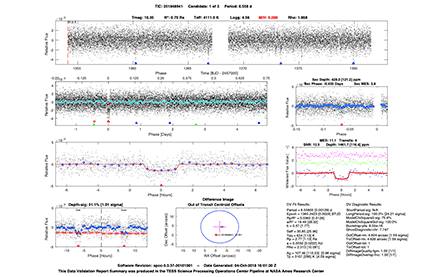

The first planet outside our own solar system was discovered almost thirty years ago in an extremely unlikely place, orbiting a pulsar, and the first exoplanet orbiting a Sun-like star was discovered nearly 26 years ago. In the time since, we’ve detected over 5,000 planets, and over 75% of these have been detected by transit surveys. The Kepler Mission, launched in 2009, has found the lion’s share of these exoplanets (>3,200) and demonstrated that each star in the night sky has, on average, at least one planet. Kepler’s success spurred NASA and ESA to select several exoplanet-themed missions to move the field of exoplanet science forward from discovery to characterization: How do these planets form and evolve? What is the structure and composition of the atmospheres and interiors of these planets? Can we detect biomarkers in the atmospheres of these planets and learn the answer to the fundamental question, are we alone? NASA selected the Transiting Exoplanet Survey Satellite (TESS) in 2014 to conduct a nearly all-sky survey for transiting planets, with the goal of identifying at least 50 small planets (<4 Earth radii) with measured masses that can be followed up by large telescopic assets, such as the upcoming James Webb Space Telescope. TESS has discovered 285 exoplanets so far, 104 of which are smaller than 2.5 Earth radii and have measured masses. In this talk I will describe how we detect weak transit signatures in noisy but beautiful transit survey data sets and present some of the most compelling discoveries made so far by Kepler and TESS.

Bio

Jon Jenkins is a research scientist and project manager at NASA Ames Research Center in the Advanced Supercomputing Division where he conducts research on data processing and detection algorithms for discovering transiting extrasolar planets. He is the co-investigator for data processing for the Kepler Mission, and for NASA’s TESS Mission, launched in 2018 to identify Earth’s nearest neighbors for follow-up and characterization. Dr. Jenkins led the design, development, and operations of the science data pipelines for both Kepler and TESS. He received a Bachelor’s degree in Electrical Engineering, a Bachelor of Science degree in Applied Mathematics, a Master of Science degree in Electrical Engineering and a Ph.D. in Electrical Engineering from the Georgia Institute of Technology in Atlanta, Georgia.

See video recording of the talk here

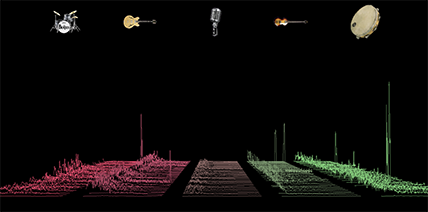

Abstract

The opportunities for machine learning to intersect with music are vast, with many ways to approach the transcription of polyphonic music. So what can, and should, artificial intelligence do with the transcription itself? In this talk, I will give a broad overview of some of the software libraries available to retrieve information from music, and we will speculate together on how machine learning might be able to aid music composition.

Bio

Gina Collecchia is an audio scientist specializing in music information retrieval, room acoustics, and spatial audio. She has worked as a software engineer at SoundHound, Jaunt VR, Apple, and most recently, Splice. She holds a bachelor’s degree in mathematics from Reed College and a master's in music technology from Stanford University. Her senior thesis at Reed, entitled The Entropy of Music Classification, led to the publication of the book Numbers and Notes: An Introduction to Music Technology in 2012 by PSI Press, founded in 2009 by the physicist Richard Crandall. Though once a happy and enduring dweller of the Bay Area, she now lives in Brooklyn.

For more information visit ginacollecchia.com

See video recording of the talk here

Fall 2022

Abstract

Many of us use smartphones and rely on tools like auto-complete and spelling auto-correct to make using these devices more pleasant, but building these tools creates a conundrum. On the one hand, the machine-learning algorithms used to provide these features require data to learn from; on the other hand, who among us is willing to send a carbon copy of all our text messages to device manufacturers to provide that data? In this talk, we show a surprising paradox discovered roughly 20 years ago: it is possible to learn from user data in the aggregate while maintaining mathematically provable privacy at the individual level. We then present a recent private mechanism of our own for this task, based on projective geometry (popularized by Brunelleschi during the Italian Renaissance).

This talk is based on joint work with Vitaly Feldman (Apple), Huy Le Nguyen (Northeastern), and Kunal Talwar (Apple).

Bio

Jelani Nelson is a Professor in the Department of Electrical Engineering and Computer Sciences at UC Berkeley, and also a part-time Research Scientist at Google. His research interests include sketching and streaming algorithms, random projections and their applications to randomized linear algebra and compressed sensing, and differential privacy. He is a recipient of the Presidential Early Career Award for Scientists and Engineers, a Sloan Research Fellowship, and Best Paper Awards at PODS 2010 and 2022. He is also Founder and President of AddisCoder, Inc., which has provided free algorithms training to over 500 Ethiopian high school students since 2011, and which is co-launching a similar "JamCoders" program in Kingston, Jamaica, in summer 2022.

See video recording of the talk here

Abstract

Are we alone in the universe? This is the existential question at the heart of the SETI Institute’s research, both for its scientists and for the artists in its Artist-in-Residence (AIR) program. Bettina Forget will discuss various modes of engagement between art, science, and technology by showcasing a selection of projects across the disciplines of new media arts, performance, AI, and storytelling.

Bio

Bettina Forget is the Director of the SETI Institute’s Artist-in-Residence (AIR) program. Her creative practice and academic research examine the re-contextualization of art and science, and how transdisciplinary education may disrupt gender stereotypes. Bettina works with traditional as well as new media arts, focusing on astrobiology, sci-fi, and feminism.

For more information visit bettinaforget.com

See video recording of the talk here

Abstract

I will discuss and describe my new composition, Musics of the Sphere, to be presented in the AlloSphere on Oct 13 and 14, 2022. The work is a 57-minute fixed-media computer music composition in six tracks of spatially modulated sounds. The sounds on each track move between six locations: Up (above), Down (below), North (in front), East (to the right), South (in back), and West (to the left). The AlloSphere, where loudspeakers are placed around the listeners to implement the six spatial locations, is therefore the perfect venue to present the piece.

Musics of the Sphere is a celebration of musics from all over the world. Over 150 excerpts of all types of music from Africa, the Americas, Asia, and Europe comprise the sounds in each track. These musics are heard in forms ranging from unmodified to completely transformed via a host of computer music techniques.

This composition is not the first of mine that uses “world musics.” In 1973, I composed a 45-minute electronic piece in four tracks called Thunder of Spring over distant Mountains. This piece was based on seven pieces of Asian and South Asian music. In the talk I will discuss the differences between Thunder and Musics from three points of view: the technical sound production, the structure of each, and the way each piece implements my thinking about music from an ethnomusical perspective.

Bio

Robert Morris (b. 1943) is an internationally known composer and music scholar, having written over 180 compositions, including computer and improvisational music and music to be performed out of doors, as well as four books and over 70 articles and reviews. Since 1980 he has taught at the Eastman School of Music, University of Rochester, as Professor of Composition and affiliate member of the Theory and Musicology Departments, and he is at present interim Chair of the Composition Department.

His many compositions have been performed in North America, Europe, Australia, and Japan. Morris's music is recorded on Albany Records, Attacca, Centaur, Composers Recordings Incorporated, Fanfare, Music and Arts, Music Gallery Editions, New World, Neuma, Open Space, and Renova.

For more information visit http://ecmc.rochester.edu/rdm/

See video recording of the talk here

Abstract

In this talk I will show examples of how attempts to simulate and exaggerate the qualities of analog systems have led to new, often unexpected, and unexplored tools for sound design. We will discuss "supernormal stimuli" and hyperreality, and how these concepts can be used as a lens for common sound processing techniques as well as a framework for developing future algorithms. We will cover lesser-known tricks for adding an organic feel to digital systems and areas that seem ripe for future exploration, using examples from our own product line as well as the work of other companies throughout the audio industry.

Topics will include new approaches to drum sampling and modeling, vinyl simulation, dynamics processing, neuroaesthetics, analog modeling, non-linearity, and timbral expansion.

Bio

Joshua Dickinson is a founder of Unfiltered Audio, a company specializing in creative audio effects and synthesizers. He is an instructor at Berkeley City College, where he teaches courses on topics such as graphic visualization, HCI, games, and entrepreneurship.

For more information visit UnfilteredAudio.com and Amusesmile.com

See video recording of the talk here

Abstract

Humans have always looked to nature for inspiration. As artists, we have done so by creating a family of “Artificial Natures”: interactive art installations that surround humans with biologically-inspired complex systems experienced in immersive mixed reality. By building immersive artificial ecologies, we question how we may coexist with non-human beings in ways that are more abundantly curious, playful, and mutually rewarding. In this talk, I will share how, driven by innate curiosity and an aesthetic survival instinct, we bring alternate worlds into superposition with our own in order to shatter the perspective of humans as the center of the world and to deepen our understanding of the complex, intertwined connections in dynamic living systems.

Bio

Haru Hyunkyung JI is a media artist and co-creator of the research project “Artificial Nature”. She holds a Ph.D. in Media Arts and Technology from the University of California, Santa Barbara and is an Associate Professor in the Digital Futures, Experimental Animation, and Graduate programs at OCAD University in Toronto, Canada. She serves as a jury member for SIGGRAPH Art Papers, ISEA, and SIGCHI DIS and has published articles in Leonardo, Stream04, and IEEE Computer Graphics and Applications, among others.

Artificial Nature is an installation series and research project by Haru JI and Graham WAKEFIELD, creating a family of interactive art installations that surround humans with biologically-inspired complex systems experienced in immersive mixed reality. Since 2007, Artificial Nature installations have been shown at international venues including La Gaîté Lyrique (Paris), ZKM (Karlsruhe), CAFA (Beijing), MOXI and the AlloSphere (Santa Barbara), and City Hall (Seoul, South Korea); at festivals such as Microwave (Hong Kong), Currents (Santa Fe), and Digital Art Festival (Taipei); and at conferences such as SIGGRAPH (Yokohama, Vancouver), ISEA (Singapore), and EvoWorkshops (Tübingen). The project has also received recognition in the international 2015 VIDA 16.0 Art & Artificial Life competition and the 2017 Kaleidoscope Virtual Reality showcase.

For more information visit https://artificialnature.net/

See video recording of the talk here

Abstract

In this talk, I present new designs for 1-bit synthesizers, audio effects, and signal mixers that transcend the classical limitations of the (historically very limited) format, creating new possibilities for musical expression. After reviewing some classical examples of 1-bit music, I will describe my approach to designing novel 1-bit musical tools. These include 1-bit stochastic wavetables, resonant and comb "filters," artificial reverberation, advanced multiplexor- and digital-logic-based signal mixers, and advanced binary bitcrushers. Special emphasis will be placed on “Crushed Velvet Noise,” a new variant of sparse noise I developed that is especially useful for 1-bit music.

Bio

Dr. Kurt James Werner conducts research related to virtual analog and circuit modeling, the history of music technology (especially drum machine voice circuits), 1-bit music, circuit bending, and sound synthesis more broadly, and sometimes composes too. For his Ph.D. in Computer-Based Music Theory and Acoustics from Stanford University's Center for Computer Research in Music and Acoustics (CCRMA), he wrote the doctoral dissertation “Virtual Analog Modeling of Audio Circuitry Using Wave Digital Filters.” This work greatly expanded the class of circuits that can be modeled using the Wave Digital Filter approach, with the classic Roland TR-808 bass drum circuit as a case study. Currently based in Somerville, MA, he was formerly an Assistant Professor of Audio at the Sonic Arts Research Centre (SARC) of Queen's University Belfast and a Research Engineer at iZotope, Inc.

For more information visit Prof. Werner's faculty page.

Abstract

Advancements in machine learning, and in particular the recent breakthroughs in artificial neural networks, have promoted novel art practices in which computers play a fundamental role in supporting and enhancing human creativity. Alongside other arts, music and dance have also benefited from machine learning and artificial intelligence, in tasks ranging from music generation to augmented dance. This talk will present an overview of my research, which focuses on the intersection of artistic creation and performance, mathematical modelling, machine learning, and human-computer interaction, and on their entwined co-evolution.

Bio

Carmine Emanuele Cella is an internationally renowned composer with advanced studies in applied mathematics. He is an assistant professor in music and technology at CNMAT, University of California, Berkeley. He studied at the Conservatory of Music G. Rossini in Italy, obtaining diplomas in piano, computer music, and composition, and then studied composition with Azio Corghi at the Accademia S. Cecilia in Rome. He also studied philosophy and mathematics and earned a Ph.D. in applied mathematics at the University of Bologna, working on symbolic representations of music.

For more information visit Prof. Cella's web page.

See video recording of the talk here

Zoom Link: https://ucsb.zoom.us/j/9314307354

Abstract

The gen~ environment for Max/MSP lets us work on sonic algorithms down to the sample and subsample levels. Written a decade ago during my doctoral research at MAT, UCSB, it has become a widely used platform for sonic experimentation, interactive arts, product design, and music making by artists such as Autechre, Robert Henke, and Jim O’Rourke. At its heart is the capacity to write whole algorithms that are processed one sample at a time, allowing unique access to filter, oscillator, and other micro-level synthesis designs. Each edit made while patching seamlessly regenerates optimized machine code under the hood, and that code can be exported for use elsewhere. In this talk I will introduce the gen~ environment, including how and why it was developed, where and how it can be applied, and what it has inspired. The talk will draw on material from a new book about sonic thinking with signals and algorithmic patterns, demystifying a gamut of synthesis and audio processing examples through patching with gen~.

Bio

Graham Wakefield is an Associate Professor and Canada Research Chair in the department of Computational Arts at York University, where he leads the Alice Lab, dedicated to computational art practice and software development in mixed/hybrid reality. His ongoing research practice applies a deep commitment to the open-endedness of computation as an art material, expressed both in new software for artists and musicians (such as the gen~ environment for Max/MSP) and in immersive artworks of biologically-inspired systems (working with Haru Ji as Artificial Nature). These installations have been exhibited at many international venues and events, including La Gaîté Lyrique/Paris and ZKM/Germany, and his research has been published in the Computer Music Journal, IEEE Computer Graphics & Applications, the International Journal of Human-Computer Studies, Leonardo, ICMC, NIME, SIGGRAPH, ISEA, EvoWorkshops, and many more.

For more information visit Prof. Wakefield's faculty page.